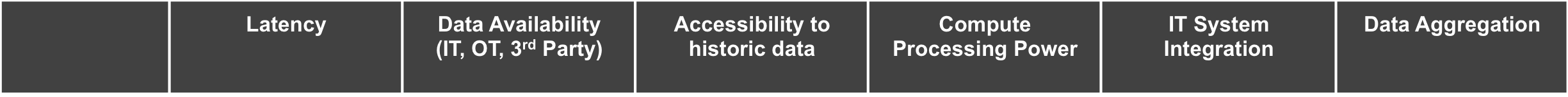

We have spoken about edge, fog, and, to a much lesser degree, cloud computing. Each has pros and cons, but at the end of the day, why collect data anywhere if you are not going to run analytics somewhere? In my opinion, this is where the rubber meets the road. You absolutely need to introduce machine learning and AI to turn your data into descriptive, predictive, and/or prescriptive outcomes that drive business value.

The challenge becomes identifying where to run your analytics. Let’s recap the basics:

Edge Computing:

Fog Computing:

Cloud Computing:

As I have stated throughout this blog series, where the intelligence lives depends on the use case and the expected results. However, here are a couple of pointers to consider on the “where, when, and why” of descriptive, predictive, and prescriptive analytics.

Here are quick definitions of each:

The matrix below can help you decide where to run analytics. Ultimately, however, the use case will dictate the location.

Examples of the use cases that should be considered at each level:

Edge:

Fog:

Cloud:
