Edge Computing

IoT, and more specifically for this post IIoT (Industrial Internet of Things), is probably the hottest buzzword today. The premise of IIoT is networked devices that are connected, interact, and exchange data. In most cases, such a networked device is called an Edge Device. In IIoT, edge devices include network-accessible actuators and sensors, and even moving assets (locomotives, cars, airplanes, ships, etc.).

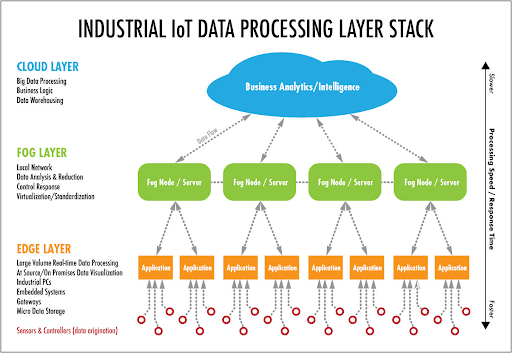

Edge Computing is the ability to collect data (and temporarily store it), filter it, aggregate it, and send it to the cloud or data center. Edge Computing also includes the ability to run analytics (make decisions) on the device itself, avoiding the latency of sending data to the cloud for a decision and waiting for that decision to come back to the device as an action. This becomes VERY important when you factor in intermittent connectivity, low bandwidth (and/or a high cost for additional bandwidth), the need for immediate insights, and security concerns.
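A minimal Python sketch of a decision made on the device itself follows. The `Reading` type, the `limit` value, and the `shut_down`/`queue_for_upload` callbacks are hypothetical placeholders rather than any particular platform's API; the point is only that the safety-critical check runs locally, while the upload to the cloud happens off the critical path.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Reading:
    # Hypothetical reading: signal name, measured value, Unix timestamp in seconds.
    signal: str
    value: float
    timestamp: float


def handle_reading(
    reading: Reading,
    limit: float,
    shut_down: Callable[[Reading], None],
    queue_for_upload: Callable[[Reading], None],
) -> bool:
    """Act locally if the reading exceeds `limit`; always queue it for the cloud.

    Returns True when a local action was taken.
    """
    acted = reading.value > limit
    if acted:
        # Decided on the device: no cloud round trip before the machine is stopped.
        shut_down(reading)
    # The upload is not on the critical path; it can wait for connectivity.
    queue_for_upload(reading)
    return acted


if __name__ == "__main__":
    stopped: List[Reading] = []
    outbox: List[Reading] = []
    # Hypothetical pressure limit of 8.0 (units depend on the sensor).
    for r in [Reading("pressure", 5.2, 0.0), Reading("pressure", 9.1, 1.0)]:
        handle_reading(r, 8.0, shut_down=stopped.append, queue_for_upload=outbox.append)
    print(len(stopped), len(outbox))  # prints "1 2"
```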

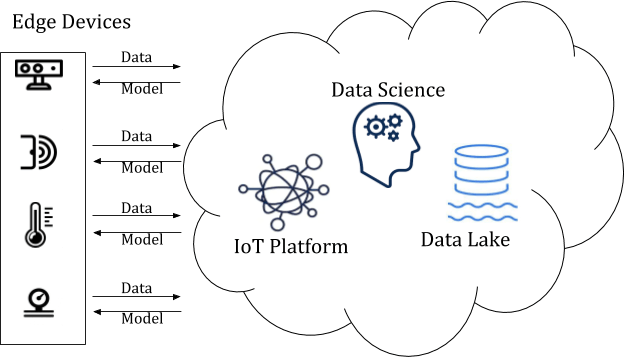

In the illustration above, the following is happening:

- Edge Devices (connected sensors) capture data.
- The data is filtered and aggregated at the Edge.
  - The edge devices (connected sensors) have an operating system and small applications running on them. These applications can include functionality like:
    - Filtering OUT data
    - Aggregating data
  - Which data is filtered out, as well as the aggregation window, is configured based on the use case. For example, is the data only relevant for 5 minutes? If so, data that arrives more than 5 minutes late can be filtered out.
- Over the network, most likely using MQTT, a reduced payload is sent to the IoT Platform (analytics, KPIs, rules, etc. can happen here).
- The data is pushed to a Data Lake (cold storage).
- Data Scientists build models that output insights and queue them back to the IoT Platform and/or device management software.
  - If the model already exists, it might be retrained.
- The IoT Platform and/or device management software pushes the model back to the Edge Device (connected sensor).
- Data is analyzed on the edge device (connected sensor) and an action is taken. For example, an anomaly with potentially catastrophic consequences is detected, and the machine being monitored is shut down immediately.
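The filtering and aggregation steps above can be sketched as follows, assuming every reading carries a timestamp and that "late" means more than 5 minutes older than the moment the edge application processes it. The `Reading` type, the summary fields, and the JSON layout of the payload are illustrative assumptions; the resulting bytes are the kind of reduced payload that would then be published (for example over MQTT).

```python
import json
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Reading:
    # Hypothetical reading: signal name, measured value, Unix timestamp in seconds.
    signal: str
    value: float
    timestamp: float


def drop_late(readings: List[Reading], now: float, max_age_s: float = 300.0) -> List[Reading]:
    """Filter OUT readings older than max_age_s (default: a 5-minute relevance window)."""
    return [r for r in readings if now - r.timestamp <= max_age_s]


def aggregate(readings: List[Reading]) -> Dict[str, Dict[str, float]]:
    """Collapse each signal's readings into a count/min/max/mean summary."""
    by_signal: Dict[str, List[float]] = {}
    for r in readings:
        by_signal.setdefault(r.signal, []).append(r.value)
    return {
        name: {"count": len(vals), "min": min(vals), "max": max(vals), "mean": mean(vals)}
        for name, vals in by_signal.items()
    }


def build_payload(device_id: str, window_end: float, summary: Dict[str, Dict[str, float]]) -> bytes:
    """Serialize the reduced payload that would be sent to the IoT Platform."""
    body = {"device": device_id, "window_end": window_end, "signals": summary}
    return json.dumps(body).encode("utf-8")


if __name__ == "__main__":
    now = 1_000.0
    raw = [
        Reading("temperature", 70.0, 900.0),
        Reading("temperature", 74.0, 950.0),
        Reading("temperature", 99.0, 600.0),  # 400 s old: late, filtered out
        Reading("pressure", 5.0, 990.0),
    ]
    summary = aggregate(drop_late(raw, now))
    print(summary["temperature"])  # {'count': 2, 'min': 70.0, 'max': 74.0, 'mean': 72.0}
    print(build_payload("sensor-42", now, summary))
```

Only the per-signal summary leaves the device, not every raw sample, which is where the bandwidth saving comes from.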

Fog Computing

Do all edge devices have the ability to do all of the above? No. Until recently (the last 5 years or so), most devices weren’t smart (meaning connected, or having the compute power to do anything interesting). For these more limited devices we introduce a new term: Fog Computing. Wikipedia defines fog computing as extending cloud computing to the edge of an enterprise’s network. Also known as edge computing or fogging, fog computing facilitates the operation of compute, storage, and networking services between end devices and cloud computing data centers. While edge computing typically refers to the location where services are instantiated, fog computing implies distribution of the communication, computation, and storage resources and services on or close to devices and systems in the control of end-users. That is a mouthful; basically, fog devices require a gateway for some level of compute and connectivity.

Fog computing and edge computing sound very similar because both bring intelligence and processing closer to the data source. The key difference between them is where the intelligence and compute power live: in an edge architecture it is on the device itself, while in a fog architecture it is on the gateway.

The illustration above shows the relationship between the Edge and Fog layers. This is a common architecture within IIoT because it allows for flexibility and scalability in analytics. As you can see, the further you get from the edge device, the slower the response/processing time. The use case(s) should dictate where you do your analytics, as each layer has pros and cons. For example, doing analytics in the cloud results in the longest latency, but you will have the richest dataset on which to build and execute machine-learning algorithms. In contrast, running analytics at the edge results in almost no latency, but the insights will be shallower by comparison because the dataset is much less rich.
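One way to express this trade-off is as a placement rule: pick the layer with the richest data whose response time still fits the use case's latency budget. The `Layer` type, the integer richness ranking, and the latency figures in the usage example are hypothetical and only illustrate the shape of the decision; real numbers have to be measured on your own network and hardware.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Layer:
    name: str
    round_trip_ms: float  # hypothetical end-to-end response time for this layer
    data_richness: int    # higher = more history/context available for analytics


def place_analytics(layers: List[Layer], latency_budget_ms: float) -> Optional[Layer]:
    """Pick the layer with the richest data whose response time fits the budget."""
    candidates = [layer for layer in layers if layer.round_trip_ms <= latency_budget_ms]
    if not candidates:
        return None  # no layer is fast enough for this use case
    return max(candidates, key=lambda layer: layer.data_richness)


if __name__ == "__main__":
    # Illustrative numbers only.
    layers = [
        Layer("edge", round_trip_ms=5, data_richness=1),
        Layer("fog", round_trip_ms=50, data_richness=2),
        Layer("cloud", round_trip_ms=500, data_richness=3),
    ]
    print(place_analytics(layers, latency_budget_ms=20).name)    # prints "edge"
    print(place_analytics(layers, latency_budget_ms=1000).name)  # prints "cloud"
```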

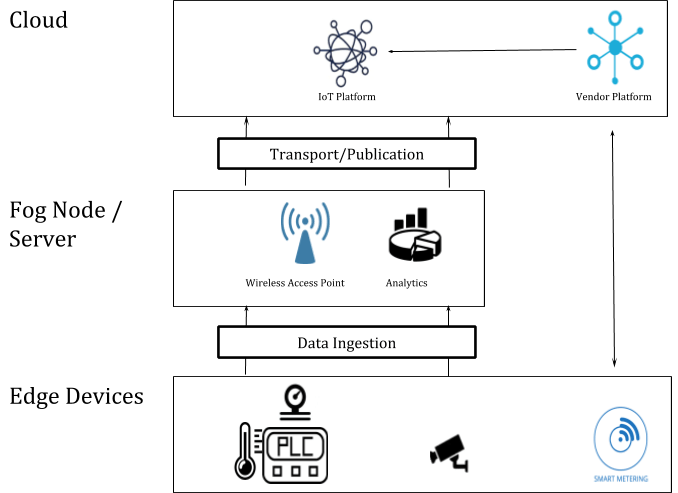

The following is an illustration that uses both Fog and Edge computing as part of its architecture.

In this example, we are taking data from a PLC, a surveillance camera, and a smart meter:

The PLC is capturing several data points, including temperature and pressure. For the sake of this example, let’s assume that it communicates via OPC UA with the Fog Node/Gateway Server, which has software running on it.

- The Fog Node/Gateway Server connects the internal network (that the PLC is on) to the outside world, so data can reach the cloud.
- The software running on the Gateway Server includes a data historian (to hold data temporarily), applications (SDK), and the ability to run machine learning models.
- The data is then published via MQTT, and the cloud IoT Platform subscribes to it and processes it.
- The IoT Platform has analytics, visualizations, KPIs, rules, etc. configured for multiple use cases. Machine learning models can also be developed in the platform or outside of it (in the data lake). Models that run in the Fog are pushed down to the Fog (along with all dependencies) and compiled as required.
- Although not illustrated, it would be possible to communicate back to the PLC to update a parameter (assuming there was a use case and the additional functionality was built).
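The gateway's "hold data temporarily" role can be sketched as a store-and-forward buffer. This is an assumed design, not the behavior of any specific historian product: readings are queued locally, and `flush` hands them in order to a `publish` callback (a hypothetical stand-in for an MQTT client's publish call), keeping whatever has not been accepted when the link drops. The topic layout is also an assumption.

```python
import json
from collections import deque
from typing import Callable, Deque, Dict, Tuple


class StoreAndForward:
    """Bounded local buffer that holds readings until the uplink accepts them.

    When the buffer is full, the oldest reading is discarded (deque maxlen behavior).
    """

    def __init__(self, capacity: int) -> None:
        self._buffer: Deque[Tuple[str, Dict]] = deque(maxlen=capacity)

    def record(self, topic: str, reading: Dict) -> None:
        self._buffer.append((topic, reading))

    def flush(self, publish: Callable[[str, bytes], bool]) -> int:
        """Publish buffered readings in order and stop at the first failure.

        `publish` returns True if the message was accepted. Returns the number sent.
        """
        sent = 0
        while self._buffer:
            topic, reading = self._buffer[0]
            if not publish(topic, json.dumps(reading).encode("utf-8")):
                break  # link is down: keep this and later readings for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._buffer)


if __name__ == "__main__":
    buf = StoreAndForward(capacity=1000)
    # Hypothetical topic layout: site/line/device/telemetry.
    buf.record("plant1/line3/plc1/telemetry", {"temperature": 71.5, "pressure": 5.1})
    buf.record("plant1/line3/plc1/telemetry", {"temperature": 71.9, "pressure": 5.0})
    print(buf.flush(lambda topic, payload: False), len(buf))  # prints "0 2" (offline)
    print(buf.flush(lambda topic, payload: True), len(buf))   # prints "2 0" (back online)
```

The buffer is bounded on purpose: if the gateway stays offline long enough, the oldest readings are dropped instead of exhausting memory. A production historian would more likely persist to disk.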


The surveillance camera would operate in a very similar fashion. The big difference is the amount of data sent up to the IoT Platform, which depends on bandwidth and use cases. For most picture/video use cases, you will want to do as much processing at the edge as possible, for the following reasons:

- It’s very costly to send a continuous video feed from the Edge/Fog to the cloud, both in dollars and in bandwidth.
- Use cases related to video and pictures require immediate action; there is low tolerance for latency. Example use cases:
  - Quality
  - Safety
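To put the cost point in numbers: at an assumed 4 Mbps, a continuous stream is 4,000,000 bit/s × 86,400 s / 8 = 43.2 GB per camera per day. The sketch below computes that figure and shows one simple way to cut it down at the edge: compare each frame with the previous one and only flag frames where enough pixels changed. Plain frame differencing on tiny grayscale frames is a stand-in chosen for illustration; real quality or safety use cases would run a trained model on the device or gateway, and the thresholds here are arbitrary.

```python
from typing import List, Sequence


def daily_gigabytes(bitrate_mbps: float) -> float:
    """Data volume of a continuous stream at the given bitrate over 24 hours (decimal GB)."""
    return bitrate_mbps * 1_000_000 * 86_400 / 8 / 1_000_000_000


def changed_fraction(prev: Sequence[int], curr: Sequence[int], pixel_delta: int) -> float:
    """Fraction of grayscale pixels whose intensity changed by more than pixel_delta."""
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_delta)
    return changed / len(curr)


def frames_to_send(
    frames: List[Sequence[int]], pixel_delta: int = 25, min_fraction: float = 0.1
) -> List[int]:
    """Indices of frames that differ enough from the previous frame to be worth uploading."""
    return [
        i
        for i in range(1, len(frames))
        if changed_fraction(frames[i - 1], frames[i], pixel_delta) >= min_fraction
    ]


if __name__ == "__main__":
    print(daily_gigabytes(4))  # prints 43.2
    still = [10] * 100
    moved = [10] * 80 + [200] * 20  # 20% of pixels changed
    print(frames_to_send([still, still, moved, moved]))  # prints [2]
```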

In the illustration above, I also included a smart meter. For the purpose of this example, assume that this smart meter can be configured to sample data at a specified rate, that rules can be configured to take action on upper/lower thresholds, and that it connects to the cloud wirelessly. Let’s also assume that the smart meter comes from a vendor that has a direct connection to its own cloud platform.

- If there is a preference to ensure that all edge data flows through a single entry point (the IoT Platform), then you can write an integration against the vendor’s API that pulls the processed data (or whatever data the vendor makes available) into the IoT Platform.
- In this type of ecosystem, you are limited to what the vendor allows you to do; however, the vendor makes firmware and configuration updates simple and scalable.
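A pull integration like the one described for the smart meter can be sketched as a polling sync with a cursor, so each poll only forwards readings the platform has not seen yet. The vendor field names (`meterId`, `ts`, `kWh`), the platform schema, and the `fetch_since` callback are all hypothetical; a real connector would call whatever endpoint and authentication the vendor's API actually provides.

```python
from typing import Any, Callable, Dict, List, Tuple

VendorRecord = Dict[str, Any]


def normalize(record: VendorRecord) -> Dict[str, Any]:
    """Map a vendor record onto the platform's own schema (both schemas are hypothetical)."""
    return {
        "device_id": record["meterId"],
        "timestamp": record["ts"],
        "energy_kwh": float(record["kWh"]),
        "source": "vendor-cloud",
    }


def sync(
    fetch_since: Callable[[float], List[VendorRecord]], cursor: float
) -> Tuple[List[Dict[str, Any]], float]:
    """Pull records newer than `cursor` and return (normalized records, new cursor).

    Records at or before the cursor are skipped so repeated polls do not duplicate data.
    """
    fresh = [r for r in fetch_since(cursor) if float(r["ts"]) > cursor]
    fresh.sort(key=lambda r: float(r["ts"]))
    new_cursor = float(fresh[-1]["ts"]) if fresh else cursor
    return [normalize(r) for r in fresh], new_cursor


if __name__ == "__main__":
    # Fake vendor endpoint returning two readings out of order.
    data = [
        {"meterId": "m-7", "ts": 120.0, "kWh": "3.5"},
        {"meterId": "m-7", "ts": 60.0, "kWh": "3.1"},
    ]
    rows, cursor = sync(lambda since: data, cursor=0.0)
    print([r["timestamp"] for r in rows], cursor)  # prints [60.0, 120.0] 120.0
    rows, cursor = sync(lambda since: data, cursor=cursor)
    print(rows, cursor)  # prints [] 120.0
```

Keeping the cursor on the platform side means the connector can be restarted or run on a schedule without re-importing readings it has already forwarded.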