1 min read

Phi-3 Fine-Tuning and New AI Models Now Available in Azure

Exciting updates for AI developers and businesses: the fine-tuning of Phi-3 mini and medium models is now available in Azure, making AI even more...


1 min read

Admin: January 7, 2026

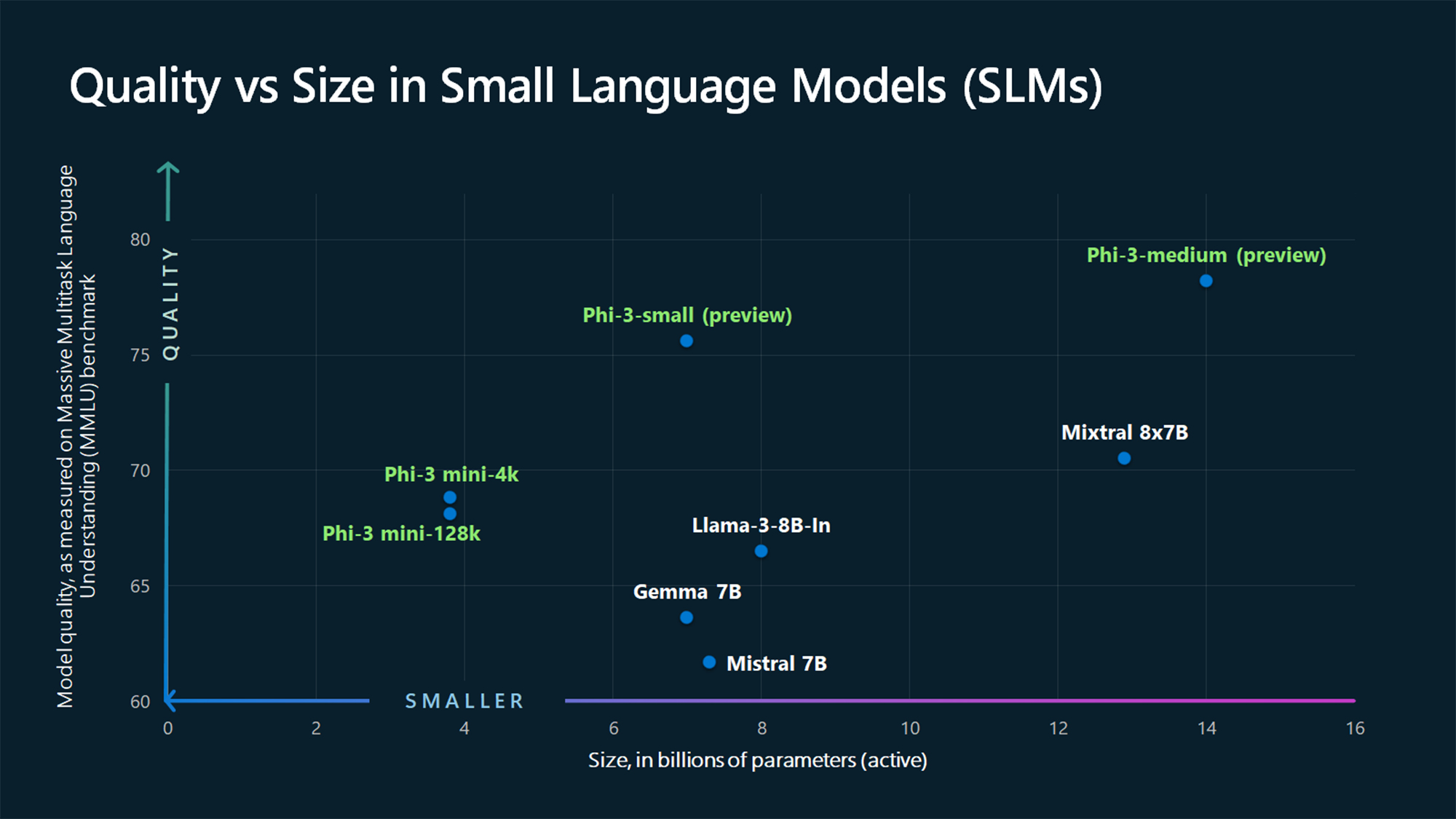

Microsoft continues to make strides in AI with the release of Phi-3, the third generation of its Phi family of Small Language Models (SLMs). While the tech world often marvels at the capabilities of Large Language Models (LLMs) like GPT-4, which are far larger (OpenAI has not disclosed GPT-4's parameter count), Phi-3 stands out for its focus on efficiency and accessibility.

The smallest member of the family, Phi-3-mini, has about 3.8 billion parameters (Phi-3-small and Phi-3-medium have about 7 billion and 14 billion). That compact size reflects a shift toward models aimed at targeted, well-defined tasks rather than open-ended generality. Because SLMs need far less memory and compute than their larger counterparts, they are well suited to scenarios where connectivity is limited or resources are constrained, such as edge systems and mobile devices.
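To make "resource-constrained" concrete, a common rule of thumb is that a model's weights need roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule to Phi-3-mini (about 3.8 billion parameters) at a few precisions. It is a back-of-the-envelope estimate, not a measurement: it counts weights only and ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Approximate parameter count for Phi-3-mini.
PHI3_MINI_PARAMS = 3.8e9

# Compare full 16-bit weights with common quantized precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit: ~{weight_memory_gb(PHI3_MINI_PARAMS, bits):.1f} GB")
```

This prints about 7.6 GB at 16-bit, 3.8 GB at 8-bit, and 1.9 GB at 4-bit, which helps explain why quantization is commonly used when running small models on phones and edge devices.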

But why should you care about Phi-3 and the rise of Small Language Models?

1. Authority and Efficiency: Phi-3 is engineered to provide authoritative answers efficiently. By homing in on specific datasets and tasks, these models can deliver accurate results for those tasks without the need for massive computational resources. For organizations using AI, this means faster response times and lower costs.

2. Accessibility: With a focus on lightweight design, Phi-3 is more accessible than its larger counterparts. Its reduced computational demands make it well-suited for deployment on mobile devices, bringing advanced AI capabilities to a wider audience.

3. Complementary to LLMs: Phi-3 isn't meant to replace Large Language Models like GPT-4; rather, it complements them. By pairing SLMs with LLMs, for example by sending simple, well-defined requests to an SLM and reserving an LLM for complex, open-ended ones, developers can balance accuracy, speed, and cost in their applications.
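One common way to pair the two is a router that keeps short, targeted requests on the SLM and escalates the rest to the LLM. The sketch below is hypothetical: `choose_model`, the keyword set, and the 150-word threshold are illustrative assumptions, and `fake_slm` / `fake_llm` are stand-ins for whatever client calls you actually use (no Azure API is shown here).

```python
import re
from typing import Callable

# A "model" here is anything that maps a prompt to a completion.
ModelFn = Callable[[str], str]

# Hypothetical signals that a request is open-ended or multi-step.
COMPLEX_HINTS = {"analyze", "compare", "plan", "why"}


def choose_model(prompt: str, max_slm_words: int = 150) -> str:
    """Return "slm" for short, targeted prompts and "llm" otherwise (illustrative heuristic)."""
    words = re.findall(r"[a-z']+", prompt.lower())
    if len(words) > max_slm_words:
        return "llm"  # long inputs go to the larger model
    if COMPLEX_HINTS.intersection(words):
        return "llm"  # whole-word match on a complexity hint
    return "slm"


def answer(prompt: str, slm: ModelFn, llm: ModelFn) -> tuple[str, str]:
    """Route the prompt and return (route, completion)."""
    route = choose_model(prompt)
    model = slm if route == "slm" else llm
    return route, model(prompt)


# Stand-in models for demonstration; replace with real client calls.
def fake_slm(prompt: str) -> str:
    return f"[SLM] {prompt}"


def fake_llm(prompt: str) -> str:
    return f"[LLM] {prompt}"


if __name__ == "__main__":
    print(answer("Summarize this ticket in one line.", fake_slm, fake_llm))
    print(answer("Compare these three vendor proposals and plan a rollout.", fake_slm, fake_llm))
```

In practice the keyword check is often replaced by a trained classifier, or by letting the SLM answer first and escalating when its output looks unreliable; the heuristic here only shows the shape of the pattern.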

Phi-3 is available through Microsoft's Azure AI platform, whose model catalog offers over 50 models. This range lets organizations choose the model that best fits their specific needs and applications, giving them flexibility and scalability in AI deployment.

One of the most compelling aspects of Phi-3 is its cost-effectiveness. Compared to the hefty price tag associated with deploying and maintaining LLMs like GPT-4, SLMs offer a more economical solution. This affordability opens doors for organizations that may have previously hesitated to invest in AI services due to cost concerns.

With its focus on efficiency, accessibility, and cost-effectiveness, Phi-3 aims to democratize AI by making advanced language processing capabilities more attainable for organizations of all sizes. As the AI landscape continues to evolve, SLMs like Phi-3 are likely to play an important role in shaping intelligent technology.

If you’re interested in following up on this with someone on our team, please feel free to reach out. We love this stuff.

1 min read

Exciting updates for AI developers and businesses: the fine-tuning of Phi-3 mini and medium models is now available in Azure, making AI even more...

1 min read

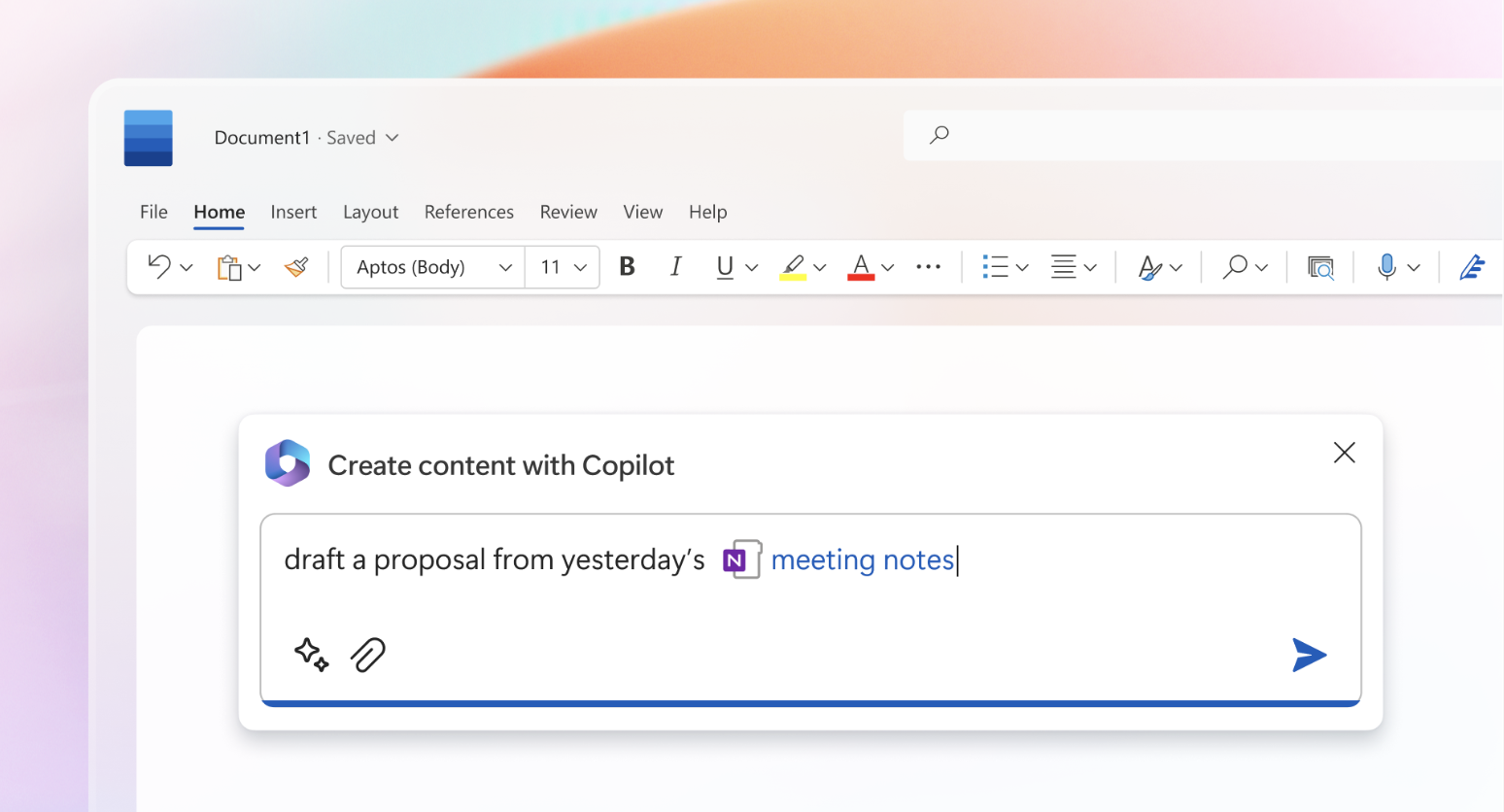

The Large Language Models (LLM) and GPT capabilities are coming to the Microsoft 365 suite of products. These new AI capabilities will be called...

1 min read

Microsoft has officially released the next generation of Phi models, a set of Small Language Models (SLMs) designed for efficiency, speed, and...